Storing Money in Java (and other languages)

I was reading a book about Java and it had this practice question at the end of a chapter:

If you've studied much about Java you'll probably realize what's wrong here, so feel free to share it as a meme. But if not, this is a great learning opportunity.

TL;DR: Java has float and double as primitives (a double is 64 bits to a float's 32, so it holds more precision), but both can produce rounding errors when working with fractional amounts. So using either for money, or anything else that has to be exact, is a cardinal sin in Java.

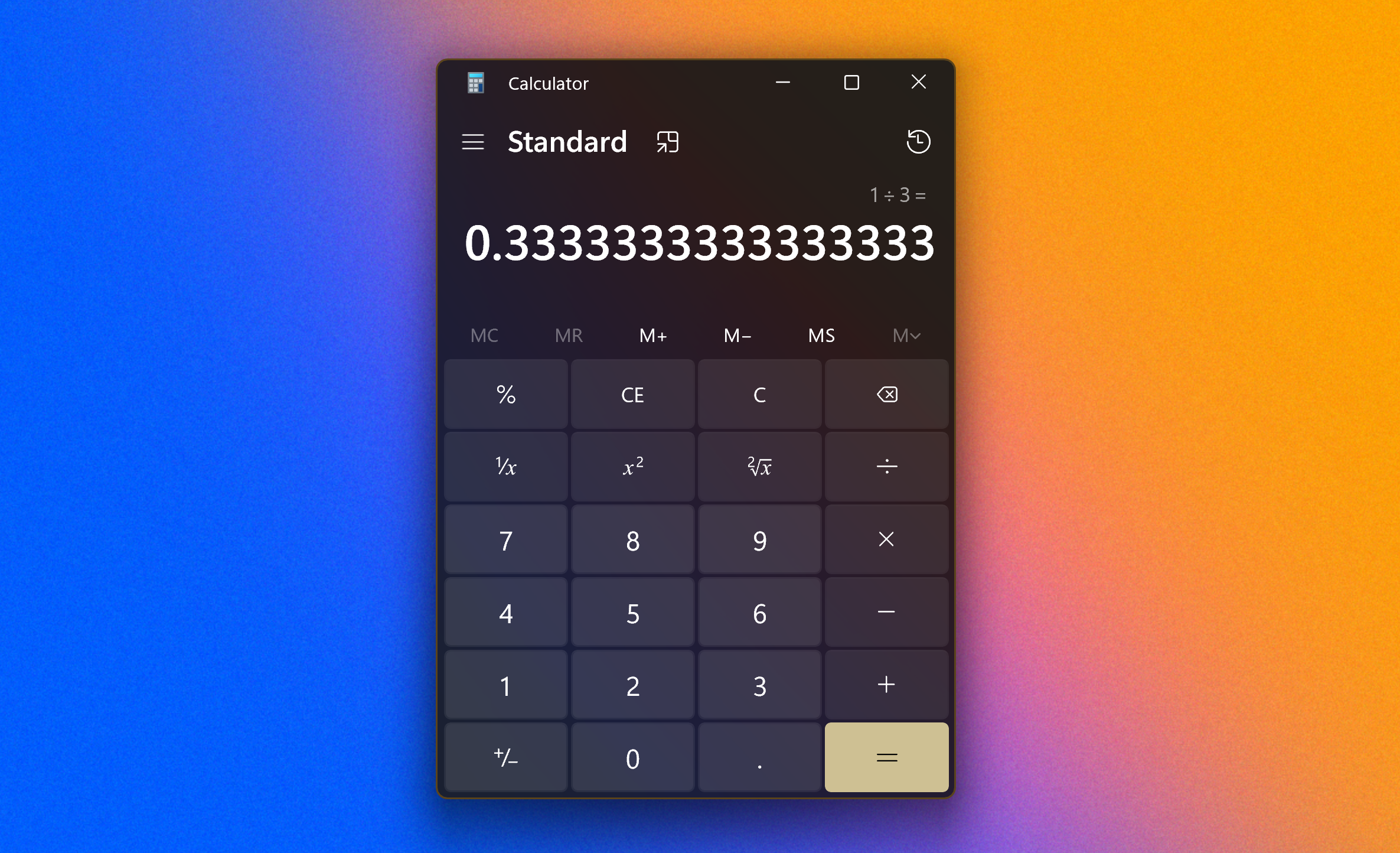

It's the same concept as the fraction 1/3 in base-10, where you get 0.3333[...]. Nothing is ever priced at 1/3 of a dollar because we can't write that amount exactly with money (which is base-10).

The problem comes in with base-2 because the prime numbers that cause the "glitches" are different. If you've studied how computers work at a low level, or have just seen The Matrix, you may know that computers at their lowest level store data as true and false, better known as 1 and 0.

This true and false data is known as binary, which is a base-2 number system (digits 0-1). Humans commonly use decimal, which is base-10 (digits 0-9). One of the first things we learn in school when we start learning division is prime numbers. Prime numbers are numbers greater than one that are only divisible (without a remainder) by 1 and themselves. The primes are the same in every base: 2, 3, 5, 7, 11, 13, etc. These prime numbers become a problem later in school when we're taught that the fraction 1/3 in base-10 is a never-ending sequence of 3s. In school, we typically cheat by rounding.

The math behind it is that 10's prime factors are 2 and 5, which means that in decimal, any fraction whose denominator has no prime factors other than 2 and 5 (such as 1/2, 1/5, or 1/20) can be represented exactly. Binary is similar, but 2's only prime factor is 2. That means in base-2, only fractions whose denominator is a power of 2 can be represented exactly. One tenth (0.1 in base-10) is a problem when converted to base-2 because, like 1/3 in decimal, it becomes an endlessly repeating fraction. Computers, like humans, don't have infinite memory, so they do just what we learned to do in grade school: they round.
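If you want to see that rounding for yourself, here is a minimal sketch. It leans on the fact that the `BigDecimal(double)` constructor keeps the exact binary value a double holds instead of the short version Java normally prints. The class and method names (`ExactValue`, `exact`) are made up for this illustration.

```java
import java.math.BigDecimal;

// Hypothetical helper for illustration: reveals the exact value a double stores.
class ExactValue {

    // new BigDecimal(double) converts the binary value exactly, with no rounding.
    static BigDecimal exact(double d) {
        return new BigDecimal(d);
    }

    public static void main(String[] args) {
        // 1/2 has a power-of-2 denominator, so it is stored exactly.
        System.out.println(exact(0.5)); // prints 0.5

        // 1/10 does not, so the stored value is only the nearest neighbor.
        System.out.println(exact(0.1));
        // prints 0.1000000000000000055511151231257827021181583404541015625
    }
}
```

So the 0.1 you typed is really a number a tiny bit larger than 0.1. Java just hides that when it prints a double.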

That means that something that makes perfect sense with money, like buying ten $0.10 candies, breaks when it's represented on a computer (which uses base-2).

To illustrate, I've made a quick demo program in Java. Starting at 0, if we add 0.1 ten times, we end up with 0.9999999999999999[...]
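The original demo isn't reproduced in this post, so here is a rough sketch of what such a program might look like. The names (`TenDimes`, `addDimes`) are my own placeholders, not anything from the book.

```java
// Hypothetical demo: add 0.1 to a double a given number of times.
class TenDimes {

    static double addDimes(int count) {
        double total = 0.0;
        for (int i = 0; i < count; i++) {
            total += 0.1; // each addition rounds to the nearest double
        }
        return total;
    }

    public static void main(String[] args) {
        double total = addDimes(10);
        System.out.println(total);        // prints 0.9999999999999999
        System.out.println(total == 1.0); // prints false
    }
}
```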

I never enjoyed math in school, but that doesn't seem right. Ten dimes should indeed equal one whole dollar. 💵

The solution is typically either to multiply by 100 on the way in (and divide by 100 on the way out) so that all money is stored as whole-number cents, or to use a class called BigDecimal, which stores decimal values exactly and gives you more control over rounding. Both options avoid the binary rounding problem described above. Some APIs and tools go as far as using cents from the start. Stripe, for example, requires developers to send prices in cents.
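Here is a small sketch of both approaches, assuming US-style currency with two decimal places. The class and method names (`MoneyDemo`, `totalCents`, and so on) are invented for the example, and the choice of `RoundingMode.HALF_EVEN` is just one reasonable option, not a rule.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

// Hypothetical examples of the two common ways to store money safely.
class MoneyDemo {

    // Option 1: keep everything in whole cents.
    // Math.multiplyExact throws ArithmeticException on overflow instead of wrapping.
    static long totalCents(long priceCents, int quantity) {
        return Math.multiplyExact(priceCents, quantity);
    }

    // Turn cents back into a dollar string for display (100 -> "1.00").
    static String formatCents(long cents) {
        return BigDecimal.valueOf(cents, 2).toPlainString();
    }

    // Option 2: BigDecimal built from a String, never from a double.
    static BigDecimal totalDollars(String price, int quantity) {
        return new BigDecimal(price).multiply(BigDecimal.valueOf(quantity));
    }

    // Division can still produce endless digits, so a scale and rounding mode are required.
    static BigDecimal splitEvenly(String total, int parts) {
        return new BigDecimal(total)
                .divide(BigDecimal.valueOf(parts), 2, RoundingMode.HALF_EVEN);
    }

    public static void main(String[] args) {
        System.out.println(formatCents(totalCents(10, 10))); // prints 1.00
        System.out.println(totalDollars("0.10", 10));        // prints 1.00
        System.out.println(splitEvenly("1.00", 3));          // prints 0.33
    }
}
```

Note the last line: splitting a dollar three ways still leaves a cent unaccounted for. Neither approach makes 1/3 representable. They just make you decide what happens to the remainder instead of letting the hardware decide for you.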

So now that you know that storing money in a double is bad, you too can enjoy memes about money data types.